Written by Scott Wilson

If you haven’t gotten confused at least once about the differences between artificial intelligence (AI) and machine learning (ML), you haven’t been keeping up with current events.

The explosion of generative AI such as DALL-E and ChatGPT has flooded the news and social media. As often as not, the person making the post or writing the story uses machine learning and artificial intelligence as interchangeable terms.

Once you’ve been blasted with more than a handful of these stories, it’s almost impossible to keep anything straight.

If you’re at all interested in what’s really going on in the mind of the machine, though, it’s important to recognize the distinctions. And if you’re looking to build a career in AI, you’d better know what machine learning is… but you’d also better never confuse the two.

Why It’s Tough to Tell the Difference Between AI and ML

The distinction is hard to keep straight because the terms are certainly in the same family. There wouldn’t be AI as we know it today without ML, and ML wouldn’t have emerged outside the pursuit of AI.

Depending on how you look at them, ML is only a subset of the larger field of artificial intelligence, or AI may be just one application for the broader tool of machine learning. Opinions differ!

Another part of the difficulty in understanding how these terms are commonly used is that the meanings themselves are in rapid flux. Many observers and commentators on AI—which is now basically everyone—throw the terms around pretty loosely.

That is shaping perceptions and definitions in different ways. English is a dynamic language. As happened with “cloud computing,” these terms may eventually drift a long way from their original meanings just through common, if incorrect, use.

Marketing departments have been watching these terms skyrocket up the ranks of popular search phrases and are busily stenciling AI and ML on every product in their inventory… whether the descriptions are accurate or not.

But once you truly understand the origins and meaning behind both terms, you’ll see how they relate and never get them confused again. So it starts with definitions, and a short detour into family history.

Machine Learning Was Created to Develop Artificial Intelligence

Machine learning is a computational technique.

It relies on building mathematical models from large sets of data. Starting from a limited set of initial instructions, ML algorithms adjust their own internal parameters, and with them their behavior, as they learn to classify information or make predictions from that dataset.
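A minimal sketch of what “adjusting its own parameters” means, assuming the simplest possible model: a straight line y = w·x + b. The program starts knowing nothing (both parameters at zero) and repeatedly nudges them with gradient descent to reduce its error on the data. The function name, toy dataset, learning rate, and number of passes are illustrative assumptions, not part of any particular ML library.

```python
# A minimal sketch: "learning" as adjusting model parameters to fit data.
# The dataset, learning rate, and epoch count are made up for illustration.

def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the only "initial instructions": start at zero
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Take a small step "downhill" to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4, 5]
    ys = [2 * x + 1 for x in xs]  # toy data generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"learned w={w:.3f}, b={b:.3f}")
    print("prediction for x=10:", round(w * 10 + b, 2))
```

Nobody told the program that the answer was “multiply by 2 and add 1”; it recovered those values from the examples alone. Real ML systems do the same kind of thing with millions of parameters instead of two.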

Machine learning is a method that was originally developed specifically for use in artificial intelligence research, but it has since found many applications not directly related to the field.

ML techniques are regularly used in data science to analyze, clean, and sort very large and disparate data sets. ML is behind many product recommendation engines, from YouTube to Amazon. If you have ever received a credit card fraud alert because of suspicious activity, it was very likely an ML-driven system that flagged it.
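Production fraud-detection systems use far richer models and many more signals than this, but the basic idea of learning what “normal” looks like from past data and flagging departures from it can be sketched with plain statistics. Here the “model” is just the mean and standard deviation of one customer’s past purchase amounts; the helper names, the made-up history, and the three-standard-deviation threshold are all assumptions for illustration.

```python
import statistics

# Toy stand-in for a fraud check: learn "normal" from history, flag outliers.
# Not how any real card issuer works; purely illustrative.

def learn_profile(history):
    """The 'model': mean and standard deviation of past purchase amounts."""
    return statistics.mean(history), statistics.stdev(history)


def is_suspicious(amount, profile, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the mean."""
    mean, stdev = profile
    return abs(amount - mean) > threshold * stdev


if __name__ == "__main__":
    history = [42.10, 18.75, 63.00, 25.40, 37.95, 51.20, 29.99]  # made-up data
    profile = learn_profile(history)
    for amount in (45.00, 1250.00):
        print(f"{amount:>8.2f}", "ALERT" if is_suspicious(amount, profile) else "ok")
```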

One key limitation of machine learning is its narrow scope. The algorithm may change, but the only tool it can bring to bear in analysis is statistical. Other types of reasoning or calculation aren’t part of the package. An ML algorithm designed to recognize traffic and road features will not suddenly teach itself to read. It can, however, easily learn to recognize signs printed with letters like “S T O P” and “R I G H T T U R N O N L Y”, even though nothing in it connects the concepts of letters and language.

Artificial Intelligence Is Largely Powered by Machine Learning

Artificial intelligence is the concept of developing computational techniques that allow machines to reason and solve problems on par with human minds, or even to exceed those capabilities.

The idea that machines could be created to think the way humans do predates the invention of the computer.

Since computers became common, though, the idea has gained traction. That has led to many different approaches to programming machines to achieve that feat of cognition.

It’s a necessarily fuzzy concept, since human intelligence is general. A human can’t do everything, but they can attempt anything.

Some facets of AI are more limited in nature (sometimes called weak AI), but all share the characteristic of adaptability within their field. Visual processing systems, for example, can take in and attempt to recognize any sort of image. They may not always get it right, but they have the ability to adapt and make inferences.

In principle, the scope of artificial intelligence has no limits. In fact, the field’s overarching goal is to remove the limitations that computers face today. It’s about combining various sensory, computational, and analytical techniques to allow computers to adaptively solve problems of deep and variable complexity.

How Artificial Intelligence and Machine Learning Became the Magic Combination of AI Engineering

Machine learning may be the neatest trick that AI researchers have developed in the quest for useful artificial intelligence.

But it’s not even a new trick.

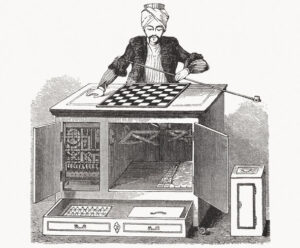

The term machine learning was coined by IBM’s Arthur Samuel in 1959. By that point, early AI researchers had confronted the inevitable difficulty of teaching machines to think… there’s an awful lot to teach. And as the amount of information grows, it becomes exponentially more difficult for a person to write the code needed to retain and analyze it.

But if you could somehow get the machine to teach itself, to learn from the data it was presented starting with only a few basic rules, then the obstacle became much less intimidating. After all, computers don’t get tired, they perform operations many times faster than a human, and they can process vast amounts of information. A self-teaching computer could be formidable.

Then, too, learning is an important subset of intelligence generally, so developing a machine that could learn on its own seemed to check a box toward the ultimate development of a generalized artificial intelligence.

So Samuel taught an IBM 701 to play checkers. It started to beat him. By 1962, it was beating championship checkers players.

But there was a problem… in 1959, computers were still running off punched tape and performing a paltry 100,000 operations per second. Although early experiments in ML were promising, the machinery of the day was incapable of running anything like a neural network. Samuel could tackle checkers with basic search tree algorithms and rote learning techniques only because the game was so simple.

How Machine Learning Algorithms Came to Resemble the Human Brain

Early ML efforts focused on linear logic: the basic if/then sequences taught in every programming class in the country. It was thought that this basic technique, layered deeply enough and performed quickly, could ultimately learn to overcome any problem.

But such methods had inherent limitations when applied to messy real-world data. To crunch and learn from fuzzy information, scientists turned to another field that was advancing by leaps and bounds in the 1960s and ’70s… neuroscience.

Even before WWII, psychologists had developed theories about the relationship between neural activity in the human brain and learning and information retention. Theorists like Turing and Rosenblatt connected those concepts to the world of computation, imagining brains as computers and vice versa.

Nodes in a machine, performing basic, weighted computations, could be created to mirror the simple on/off firing of neurons in the human brain. Each node is programmed to answer a very specific question about data. Together, in very large numbers, those answers could combine to perform astounding feats of recognition and inference.

But the computers of that era couldn’t hold enough data or process it quickly enough to test the theories.

By the 1990s, though, computational power and converging developments in understanding neural nets had advanced to the point that computers could teach themselves to read handwritten zip codes. And by 2011, deep neural nets were coming close to processing visual input as perceptively as a human.
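The weighted nodes described earlier in this section are often illustrated with Rosenblatt’s perceptron: a single node that sums weighted inputs and switches on when the total crosses a threshold. The sketch below trains one such node on the logical AND function using the classic perceptron learning rule. The function names, the toy dataset, and the learning rate of 1 are illustrative choices, not details from the original research.

```python
# A sketch of one perceptron-style node: weighted inputs, on/off output.
# Toy data (logical AND) and the learning rate are illustrative choices.

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, then an on/off threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1):
    """Perceptron learning rule: after each wrong answer, shift the weights
    toward the correct answer in proportion to the inputs."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_perceptron(AND_SAMPLES)
    for inputs, target in AND_SAMPLES:
        print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

A single node like this can only separate data with a straight line, which is why the field later turned to many nodes arranged in layers.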

The AI Winter Saw a Divergence in the Paths of ML and AI

The hardware limitations of the day helped split ML away from AI for a time. In the 1970s and ’80s, the AI field focused on basic logic branching and expert systems programming. Funding dropped as predicted breakthroughs failed to materialize, leading to a period known as the AI winter. Progress toward artificial intelligence slowed dramatically.

In the meantime, ML was taken up by statistical analysts and became one of the foundational methods in the field of data science. It continued to thrive and break new ground in self-training algorithms. Multi-layer neural networks, a concept that had been envisioned in machine learning theory as far back as the 1960s, became practical by the 1990s.

As computers and data storage grew larger and faster, ML and AI came back together.

Machine learning has become one of the most prolific techniques used in the support and development of AI. That popularity is part of the reason the two terms are so commonly confused.

Today, the kind of ML most commonly used in direct AI practice is called deep learning. That’s an outgrowth of the layered neural networks envisioned back in the ’60s and ’70s. Both conceptual and processing advances have made them the tool of choice for many modern AI projects.

Deep learning stacks layers of neural nets, each working on the output of the layer below it, to process data step by step toward some final result. Facial recognition is one tool that has made use of the technique. The bottom layer, incorporating the simplest functions, reads the basic elements of the picture… boundaries between elements, matching patterns.

Once that step is complete, its output is passed up a layer, where more complex functions can operate: recognizing hair color, say, or matching nose shapes. Those steps wouldn’t be possible without the lower-level processing happening first.
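The layer-on-layer idea can be sketched with a toy network. Real facial recognition systems learn millions of weights from data; here, as a simplifying assumption, the weights are set by hand and the “image” is a single row of six pixels. The first layer detects boundaries (places where brightness rises or falls between neighboring pixels), and a second-layer node builds only on those boundary signals, turning on when they add up to more than one edge, as on both sides of a bright stripe. The names `layer`, `detect_stripe`, and the weight values are hypothetical.

```python
# Toy two-layer network with hand-picked weights (real networks learn them).

def relu(x):
    """Pass positive signals through; silence negative ones."""
    return max(0.0, x)


def layer(inputs, weights, biases):
    """Fully connected layer: each node takes a weighted sum of every input,
    adds its bias, and applies ReLU."""
    return [relu(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def edge_detector_weights(n_pixels):
    """Layer 1: for each neighboring pixel pair, one node that responds to a
    rise in brightness and one that responds to a fall."""
    rows = []
    for i in range(n_pixels - 1):
        rising = [0.0] * n_pixels
        rising[i], rising[i + 1] = -1.0, 1.0
        falling = [0.0] * n_pixels
        falling[i], falling[i + 1] = 1.0, -1.0
        rows.extend([rising, falling])
    return rows


N_PIXELS = 6
W1 = edge_detector_weights(N_PIXELS)
B1 = [0.0] * len(W1)
# Layer 2: a single node that adds up all edge signals and only turns on
# when they total more than 1 (e.g. both sides of a stripe).
W2 = [[1.0] * len(W1)]
B2 = [-1.0]


def detect_stripe(pixels):
    edges = layer(pixels, W1, B1)           # low-level features
    (stripe_score,) = layer(edges, W2, B2)  # built only from layer-1 output
    return edges, stripe_score


if __name__ == "__main__":
    for row in ([0, 0, 1, 1, 0, 0], [0, 0, 0, 1, 1, 1], [1, 1, 1, 1, 1, 1]):
        _, score = detect_stripe(row)
        print(row, "stripe score:", score)
```

The second layer never looks at the pixels directly; it can only “see” a stripe because the first layer has already turned raw brightness into edges.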

Machine Learning May Find New Life at the Core of Artificial Intelligence Development

Although ML is probably the most popular, and one of the most successful, techniques used in AI R&D today, it’s not the only one. That’s part of the reason it’s important not to confuse the two concepts. AI is not completely dependent on machine learning. It’s just one tool in the box.

But ML has come around again to the forefront of what many scientists think could lead to the next generation of AI. That revolves around a better understanding of what intelligence looks like in its native habitat… biological life.

As you get deeper into ML in general, you can start to see the resemblance to human learning. Take the types of training that are used in ML:

- Supervised - Humans label data examples provided to the machine to help it see patterns and distinguish the differences… a series of pictures identified as dogs versus cats, for example.

- Unsupervised - Raw information is provided without context, allowing the machine to shuffle through and identify patterns and common features on its own. Given the same pictures of cats and dogs, the machine would eventually detect both similarities and differences and come to categorize the pictures in its own way.

- Reinforcement - Data is provided to the machine with or without labeling, but as it sorts and evaluates the information, a reward is provided when the classification made matches what the trainer wants to see… a point for every picture of a dog correctly labeled “dog” for instance.
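The three training styles can be contrasted on the same toy data. In this sketch, assumed purely for illustration, each “picture” is reduced to two made-up numbers (snout length and ear pointiness). The supervised learner averages the labeled examples for each class, the unsupervised learner runs a two-cluster k-means with no labels, and the reinforcement learner only ever sees a reward of 1 when its guess matches the trainer’s. All names, features, and numbers are hypothetical.

```python
import math
import random

# Made-up features for six pictures: (snout_length, ear_pointiness)
PICTURES = [(1.0, 0.9), (1.2, 0.8), (0.9, 1.0),   # cats
            (3.0, 0.3), (2.8, 0.4), (3.2, 0.2)]   # dogs
TRUE_LABELS = ["cat", "cat", "cat", "dog", "dog", "dog"]


def mean_point(points):
    return tuple(sum(coords) / len(points) for coords in zip(*points))


# Supervised: humans supply labels; learn one average point per label.
def train_supervised(points, labels):
    return {lab: mean_point([p for p, l in zip(points, labels) if l == lab])
            for lab in set(labels)}


def classify(centroids, point):
    return min(centroids, key=lambda lab: math.dist(point, centroids[lab]))


# Unsupervised: no labels; k-means finds two groups on its own.
def k_means_two(points, iterations=10):
    centroids = [points[0], points[-1]]  # naive initialization, fine for this toy
    for _ in range(iterations):
        assignment = [min(range(2), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        for c in range(2):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = mean_point(members)
    return assignment


# Reinforcement: the learner never sees labels, only a reward of 1 when its
# guess matches what the trainer wants. `labels` stands in for the trainer.
def train_reinforcement(points, labels, rounds=500, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    actions = ("cat", "dog")
    value, count = {}, {}  # (state, action) -> average reward, times tried
    for _ in range(rounds):
        i = rng.randrange(len(points))
        state = points[i][0] > 2.0  # hypothetical coarse feature: "long snout?"
        if rng.random() < epsilon:
            action = rng.choice(actions)  # explore
        else:
            action = max(actions, key=lambda a: value.get((state, a), 0.0))  # exploit
        reward = 1.0 if action == labels[i] else 0.0  # the trainer's "point"
        key = (state, action)
        count[key] = count.get(key, 0) + 1
        value[key] = value.get(key, 0.0) + (reward - value.get(key, 0.0)) / count[key]
    return {state: max(actions, key=lambda a: value.get((state, a), 0.0))
            for state in (False, True)}


if __name__ == "__main__":
    centroids = train_supervised(PICTURES, TRUE_LABELS)
    print("supervised guess for (2.9, 0.35):", classify(centroids, (2.9, 0.35)))
    print("unsupervised clusters:", k_means_two(PICTURES))
    print("reinforcement policy:", train_reinforcement(PICTURES, TRUE_LABELS))
```

Note that the unsupervised learner’s groups come out as 0 and 1 rather than “cat” and “dog”: it finds the grouping, but naming it is still up to a human.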

You don’t have to squint too hard to see the similarities to how an applied behavior analyst might approach teaching a stroke victim to speak again, or training a dog to find victims in rubble.

If machines are to develop the kind of analytical and reasoning abilities that even begin to approach those biological systems, then machine learning is likely the method that is going to take them there. And that’s a boon to artificial intelligence overall… even if they’re not the same thing.