Written by Scott Wilson

Computer vision is a field that seems pretty self-defining: to give computers the tools to see. But what is sight? What do researchers mean when they talk about giving computers that ability?

At one end of the scale of interpretations, it’s the same as the capability of human sight: an ability to find meaning in visual input.

But at the other end, it’s a very different kind of capability than the one that we take for granted. What a computer “sees” when it examines a picture or video image is dramatically different from what we see. The blurry world of analog that brings smears of color and form to the human mind is translated into pinpoint pixels with sharply defined mathematical characteristics.
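To make that contrast concrete, here is a minimal Python sketch of what a computer actually receives: a grid of numbers. The tiny 2×2 image is invented for illustration. The grayscale conversion assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which are one common convention among several.

```python
# A 2x2 RGB image: each pixel is an (R, G, B) triple of 8-bit values (0-255).
# The pixel values are invented purely for illustration.
image = [
    [(255, 0, 0), (0, 255, 0)],      # red, green
    [(0, 0, 255), (255, 255, 255)],  # blue, white
]

def to_grayscale(rgb_image):
    """Convert RGB pixels to single-channel brightness using BT.601 luma weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]

gray = to_grayscale(image)
print(gray)
```

Running it prints `[[76, 150], [29, 255]]`. To this formula, the pure green pixel looks far brighter than the pure blue one, which roughly mirrors how human eyes weight those colors.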

Despite the differences, though, computer vision is achieving great things in AI today. And it’s a key piece of the technological puzzle in building new artificial intelligence that can take in data from the world around it and engage it with reason and action as humans do.

What Is Computer Vision?

Computer vision takes in the big sweep of acquiring, analyzing, and interpreting images through digital computers. It’s also typically tied to some end goal of that process: how a robot should move its arm to pick up a cup, whether a picture contains a stop sign, whether a colored blob in the street is a plastic bag or a bouncing ball likely to be followed shortly by a small child.

…modeling the visual world in all of its rich complexity is far more difficult than, say, modeling the vocal tract that produces spoken sounds.

~ Richard Szeliski, Computer Vision: Algorithms and Applications

Computer vision borrows much of its inspiration from human vision, which relies on three main components:

- Sensors (the eyes), intricate organs capable of converting light into electrical signals varying with intensity and frequency

- Preprocessing cortexes (the occipital lobes) that take in those impulses and organize them into forms, objects, and indications of motion

- Perception processes (the temporal and parietal lobes) where recognition and spatial location are computed for further use by high-level brain functions

Visual processing is the largest system in the human brain. It’s also one of the most important.

So it’s not surprising that trying to rebuild it in digital form is one of the premier challenges in artificial intelligence.

Computer Vision Projects Have Grown Together Over Time

The field of computer vision today is actually a set of different technologies and techniques that have at times diverged or merged along the path toward dealing with different challenges. They include:

- Signal processing - A discipline that grew out of electrical engineering, it focuses on analyzing and interpreting different kinds of signals and turning them into useful data. Many general techniques from this discipline are useful in computer vision.

- Image analysis - Image recognition and analysis are the outgrowth of early efforts to process two-dimensional visual information and digitize it.

- Machine vision - Industrial uses of camera equipment and computers led to the discipline of machine vision systems, which approaches visual processing from an applied engineering perspective. It often deals with more controlled environments and can take more simplistic approaches to producing useful information from imagery.

- Pattern recognition - Similar to signal processing, pattern recognition focuses on developing useful information from chaotic inputs. Relying primarily on statistical evaluation and predictions, it has been used in license plate and handwriting recognition systems.

- Photogrammetry - Related to mapping and geoscience, photogrammetry is the science of developing information about physical objects through sensor interpretation. Although this often comes through radar and lidar inputs, advances in photographic interpretation feed into computer vision in general.

What Is Machine Vision vs Computer Vision?

Just going by the name, you’d think a machine vision system and computer vision AI are basically the same thing. And as many people bring fresh eyes to AI concepts, the terms are more and more being used interchangeably.

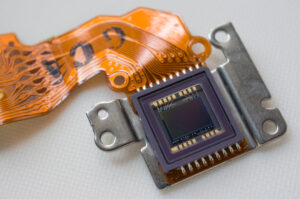

But that’s not how it started. Machine vision software grew out of industrial engineering, not artificial intelligence development. The charge-coupled device (CCD) was invented in 1969, and in the 1970s it led to small, energy-efficient cameras. Their semiconductor sensors converted light directly into electrical charges that were read out as pixels. The resolution of early machine vision cameras wasn’t good, but with the right lighting it was good enough for basic algorithms to assess very simple differences in images.

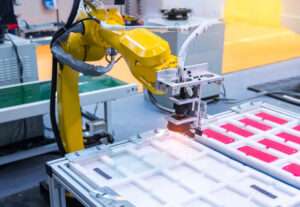

Coming at the perfect time to augment the industrial automation boom and support the first factory robotics systems, machine vision had its own professional trade association (the Automated Imaging Association, or AIA) as early as 1984. Machine vision systems inspected auto parts, checked fruits for spoilage, and read labels for sorting long before AI computer vision got out of bed.
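The kind of “very simple differences” early machine vision systems looked for can be sketched as golden-template comparison. Under controlled lighting, each pixel of a part is compared against a known-good reference image, and large deviations are flagged. This is a minimal Python illustration under those assumptions. The images, the `inspect` function, and the `pixel_tolerance` and `max_bad_pixels` parameters are all hypothetical. Real systems also align the images and allow for lighting drift.

```python
# Hypothetical 4x4 grayscale images (0-255): a known-good "golden" part and a part under test.
golden = [
    [200, 200, 200, 200],
    [200,  50,  50, 200],
    [200,  50,  50, 200],
    [200, 200, 200, 200],
]
candidate = [
    [200, 198, 201, 200],
    [200,  52,  49, 200],
    [200,  50, 200, 200],  # one dark pixel is missing: a possible defect
    [199, 200, 200, 200],
]

def inspect(reference, test, pixel_tolerance=20, max_bad_pixels=0):
    """Flag pixels whose brightness differs from the reference by more than
    pixel_tolerance; pass the part only if at most max_bad_pixels are flagged."""
    bad = [
        (y, x)
        for y, (ref_row, test_row) in enumerate(zip(reference, test))
        for x, (r, t) in enumerate(zip(ref_row, test_row))
        if abs(r - t) > pixel_tolerance
    ]
    return len(bad) <= max_bad_pixels, bad

passed, defects = inspect(golden, candidate)
print(passed, defects)  # False [(2, 2)]
```

The small differences of one or two brightness levels are tolerated as sensor noise. The missing dark region at row 2, column 2 fails the part.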

Of course, as computer vision has become more capable and flexible, convolutional neural nets have found their way into machine vision systems as well. In fact, the two technologies are likely to merge, just as the terms are already being mingled in the media.

Basic image scanning technology was invented in the late 1950s. It was simple stuff, just digitizing and then replicating two-dimensional pictures, but it put that data into a digital format that could be processed further. Computer scientists began to apply lessons from the study of mammalian visual processing systems, working on object recognition.

Handwriting and optical character recognition were soon to follow. By 2001, computers could be taught to recognize faces.

Today, cameras on smartphones can be used to read and translate from other languages. Self-driving vehicles spot obstacles, lane markers, and speed limit signs. And your favorite social media network plucks out pictures of friends to show you based on facial recognition algorithms driven by computer vision.

How Computer Vision Engineers Develop a Digital Version of Vision

AI engineers have approached the problems of teaching computers to see through a process inspired by how human vision works: feeding the input through vast neural networks, trained to discern piece by piece the important qualities and objects in the image.

Today, these are primarily convolutional neural networks (CNNs), a deep learning architecture well suited to image data. A CNN passes image data through a series of layers. Each layer applies small filters that examine one small patch of the image at a time, looking for specific features, before passing its results on to the next layer.

This process resembles the human vision system. Simpler features are recognized in earlier parts of the processing stream, and they gradually build up into a more complete object as the data moves further along.

In some senses, these networks learn how to process images in a similar way to the brain, too. They are fed enormous amounts of different pictures, showing similar objects from different angles in different settings, under various lighting conditions, and in various poses.

As images pass through the network, the early layers concentrate on detecting edges, and later layers discern the shapes those edges create. Through the magic of deep learning, the visual processing algorithms can teach themselves what color is, what a dog looks like, and how it’s different from (and similar to) a cow.
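The layer-by-layer process can be sketched in plain Python. This is a simplified forward pass through one convolutional layer, not a production implementation:

- a 3×3 kernel slides over small patches of a made-up 6×8 image;
- a ReLU keeps only positive responses;
- 2×2 max pooling shrinks the result.

The kernel is hand-set to respond to a dark-to-bright vertical edge, which is an assumption for illustration. In a trained CNN, the kernel values are learned from data, and frameworks like TensorFlow or PyTorch do this work far more efficiently.

```python
def conv2d(image, kernel):
    """Slide the kernel over every position where it fits entirely inside the image
    ("valid" mode, computed as cross-correlation, as most CNN libraries do)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Downsample by keeping the largest value in each non-overlapping size x size block."""
    return [
        [
            max(feature_map[y + i][x + j] for i in range(size) for j in range(size))
            for x in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for y in range(0, len(feature_map) - size + 1, size)
    ]

# A 6x8 image: dark on the left half, bright on the right half (one vertical edge).
image = [[0, 0, 0, 0, 1, 1, 1, 1] for _ in range(6)]

# Hand-set vertical-edge kernel; a trained CNN would learn these values from data.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

features = max_pool(relu(conv2d(image, kernel)))
print(features)  # [[0, 3, 0], [0, 3, 0]]
```

The pooled output marks the edge in the middle columns. The flat regions on either side produce no response.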

Of course, on its own it doesn’t know that green is green or that a dog is called a dog. For that, the training data must be labeled.
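Why labels matter can be shown with a deliberately simple stand-in for a neural network: a nearest-centroid classifier in Python. The two-number feature vectors and the “cow” and “dog” examples are invented; a real model would learn its own features from pixels. The point is that the names exist only because a human attached them to the training examples.

```python
from math import dist

# Hypothetical labeled training data: each example is a (feature_vector, label) pair.
# The features are invented numbers standing in for whatever a model extracts from pixels.
training_data = [
    ((0.90, 0.10), "cow"),
    ((0.80, 0.20), "cow"),
    ((0.20, 0.80), "dog"),
    ((0.30, 0.90), "dog"),
]

def train_centroids(examples):
    """Average the feature vectors for each label. The labels supply both the
    grouping and the names; the features alone could not say a group is called "dog"."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(training_data)
print(predict(centroids, (0.25, 0.70)))  # dog
```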

ImageNet: The Computer Vision Dataset That Started It All

Today, machine learning researchers have developed new ways for AI to generate its own training materials. Synthetic data, like that generated by the Unity computer vision system, uses traditional game tools to produce training data. Researchers also comb IMDb (the Internet Movie Database) and Wikipedia for face images with names attached.
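Synthetic data has one big attraction: because a program creates the scene, it knows the right answer for free. Unity’s tools render full 3D scenes, and the Python sketch below is only a toy analogy. It assumes an 8×8 canvas with one bright rectangle as the “object.” The `make_synthetic_sample` function and its label format are hypothetical.

```python
import random

def make_synthetic_sample(size=8, rng=random):
    """Draw one bright rectangle at a random position on a dark square canvas.
    Because the program places the object itself, the bounding-box label is
    known exactly, with no human annotation needed."""
    w = rng.randint(2, size // 2)
    h = rng.randint(2, size // 2)
    x0 = rng.randint(0, size - w)  # randint is inclusive, so the box always fits
    y0 = rng.randint(0, size - h)
    image = [[0] * size for _ in range(size)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = 255
    label = {"class": "box", "bbox": (x0, y0, w, h)}  # (x, y, width, height)
    return image, label

rng = random.Random(42)  # fixed seed so the generated dataset is reproducible
dataset = [make_synthetic_sample(rng=rng) for _ in range(100)]
image, label = dataset[0]
print(label)
```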

But first, there was ImageNet.

It started in 2006 when Professor Fei-Fei Li realized that the biggest roadblock in building better computer vision algorithms was training data: there weren’t enough labeled images of enough objects to really build an effective convolutional network.

There wasn’t an easy way to do it. Li needed not only a lot of pictures, but a lot of interpretation. Each image had to have a human categorize it before a computer could use it.

But it wasn’t an unprecedented problem, either. Something similar had been done for language, resulting in a database called WordNet: a hierarchical classification of words, from general (“canine”) to specific (“husky”).

Li started there. A set of pictures matching each word would give computer vision models something to chew on.
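The WordNet idea of nesting specific concepts under general ones can be sketched as a simple parent map. This Python example uses a small, simplified hierarchy loosely modeled on WordNet. Real WordNet has many more intermediate levels, and a concept can have more than one parent. The image file names are invented placeholders. Attaching pictures to the specific concepts means a query for a general concept like “canine” can gather images of huskies and wolves alike.

```python
# A simplified, hand-built hierarchy loosely modeled on WordNet.
# Each concept points to its more general parent (its "hypernym").
parent = {
    "husky": "dog",
    "poodle": "dog",
    "dog": "canine",
    "wolf": "canine",
    "canine": "carnivore",
    "carnivore": "animal",
}

# ImageNet-style idea: attach labeled pictures to specific concepts.
# File names are invented placeholders.
images = {
    "husky": ["husky_001.jpg", "husky_002.jpg"],
    "wolf": ["wolf_001.jpg"],
}

def path_to_root(concept):
    """Walk from a specific concept up to the most general one."""
    path = [concept]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path

def images_under(concept):
    """Collect every picture labeled with this concept or any concept beneath it."""
    return [
        img
        for specific, imgs in images.items()
        if concept in path_to_root(specific)
        for img in imgs
    ]

print(path_to_root("husky"))   # ['husky', 'dog', 'canine', 'carnivore', 'animal']
print(images_under("canine"))  # ['husky_001.jpg', 'husky_002.jpg', 'wolf_001.jpg']
```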

Then she got creative. Using Amazon Mechanical Turk, she paid online workers pennies per image to look at pictures and match them to labels from WordNet. Each image was labeled by multiple workers to improve accuracy. ImageNet was born. By its 2009 debut, more than three million labeled images covered more than 5,000 conceptual categories.
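Combining several workers’ answers can be as simple as a majority vote. ImageNet’s actual quality-control procedure was more involved; this Python sketch shows only the basic idea. The votes are invented, and `min_agreement` is a hypothetical parameter. Images without a clear majority come back as `None`, which in practice might mean collecting more votes.

```python
from collections import Counter

# Hypothetical crowd labels: several workers label each image independently.
crowd_labels = {
    "img_001.jpg": ["husky", "husky", "wolf"],
    "img_002.jpg": ["poodle", "poodle", "poodle"],
    "img_003.jpg": ["wolf", "husky"],  # a tie: no majority
}

def consensus(votes, min_agreement=0.5):
    """Return the most common label if more than min_agreement of the votes
    agree on it; otherwise return None."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None

for image_id, votes in crowd_labels.items():
    print(image_id, consensus(votes))
```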

In 2012, Geoffrey Hinton, Ilya Sutskever, and Alex Krizhevsky took a deep learning approach: they trained a convolutional neural network on ImageNet data using graphics processors (GPUs). At that year’s ImageNet Large Scale Visual Recognition Challenge, their model blew the other entries out of the water. It reached a top-5 error rate of about 15 percent, compared with about 26 percent for the runner-up. By 2017, most teams in the annual competition were below a 5 percent error rate.
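The competition’s headline number was typically top-5 error. A prediction counts as correct if the true label appears anywhere among the model’s five highest-scoring guesses out of 1,000 categories. This Python sketch computes top-k error for any k. The scores are invented. It uses three classes with k of 1 and 2 so the arithmetic is easy to check by hand.

```python
def top_k_error(scores, true_labels, k=5):
    """Fraction of images whose true label is NOT among the model's k highest-scoring guesses."""
    misses = 0
    for class_scores, truth in zip(scores, true_labels):
        top_k = sorted(class_scores, key=class_scores.get, reverse=True)[:k]
        if truth not in top_k:
            misses += 1
    return misses / len(true_labels)

# Invented model scores for four images over three classes.
scores = [
    {"husky": 0.6, "wolf": 0.3, "cat": 0.1},  # truth: husky (top-1 hit)
    {"husky": 0.5, "wolf": 0.4, "cat": 0.1},  # truth: wolf (top-1 miss, top-2 hit)
    {"husky": 0.2, "wolf": 0.7, "cat": 0.1},  # truth: cat (miss at k=1 and k=2)
    {"husky": 0.1, "wolf": 0.1, "cat": 0.8},  # truth: cat (top-1 hit)
]
truths = ["husky", "wolf", "cat", "cat"]

print(top_k_error(scores, truths, k=1))  # 0.5
print(top_k_error(scores, truths, k=2))  # 0.25
```

Allowing more guesses can only lower the error. That is why top-5 figures are smaller than the stricter top-1 figures for the same model.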

ImageNet not only created a revolution in computer vision. Arguably, the solution that Hinton, Sutskever, and Krizhevsky came up with also set the pattern for modern deep learning itself. Much of the progress in modern AI may trace back to Li’s insight and efforts.

Computer Vision Projects Involve Combining Software Interpretation with Hardware Capabilities

One unique element of computer vision in artificial intelligence is how tied it is to physics and sensory hardware. Before a single pixel of an image can be processed, it must be captured and translated into digital format.

The very process of image capture involves an understanding of optics and of the characteristics of the electromagnetic radiation being captured. Artifacts like lens flare or chromatic aberration have to be either avoided or accounted for in computer vision software.

But hardware also gives computer vision some big advantages over the old-fashioned biological version. Computers have no problem processing infrared or X-ray imagery just like any other picture. They can also bring many techniques to bear for enhancing images and fixing image issues before they even feed pictures into vision algorithms.
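One of the simplest enhancement techniques is contrast stretching. Many thermal and X-ray sensors record more than 8 bits per pixel, so their raw values must be mapped into a displayable 0 to 255 range. This Python sketch assumes invented 16-bit readings and a linear min-max stretch. `stretch_to_8bit` is a hypothetical helper. Real pipelines often use percentiles or histogram equalization instead of the raw minimum and maximum.

```python
def stretch_to_8bit(raw, low=None, high=None):
    """Linearly map raw sensor values in [low, high] onto 0-255, clipping values outside.
    By default, low and high are the image's own minimum and maximum (min-max stretching)."""
    flat = [v for row in raw for v in row]
    low = min(flat) if low is None else low
    high = max(flat) if high is None else high
    span = max(high - low, 1)  # avoid dividing by zero on a perfectly flat image
    return [
        [min(255, max(0, round((v - low) * 255 / span))) for v in row]
        for row in raw
    ]

# Hypothetical 16-bit thermal readings. The detail sits in a narrow band of values
# that would collapse to a nearly uniform gray (about 117 to 119) if simply divided by 256.
raw = [
    [30000, 30100, 30200],
    [30300, 30400, 30500],
]
print(stretch_to_8bit(raw))  # [[0, 51, 102], [153, 204, 255]]
```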

What Are the Biggest Computer Vision Applications?

The uses and potential for computer vision are nearly unlimited. It’s as close a thing as there is to a foundational technology for true artificial general intelligence, and for many points along the way there. Without an ability to view and assess the real world, computers will have a limited ability to learn and interact with their environment. Effective computer vision is a pillar in fields like:

- Robotics - Robotics is a clear field of application for computer vision. Mobile robots need to be able to pathfind through the world; robots of all types need references for interacting with their environment. Computer vision is also slowly folding in machine vision systems that inspect products and oversee automated manufacturing.

- Agriculture - Farm automation is another big area of interest in computer vision. Monitoring crops and livestock and detecting insect infestations and diseases are all areas where computer vision engineers are making progress. Drone-based systems cover a lot of ground quickly and safely, allowing farmers to increase productivity and predict yields early.

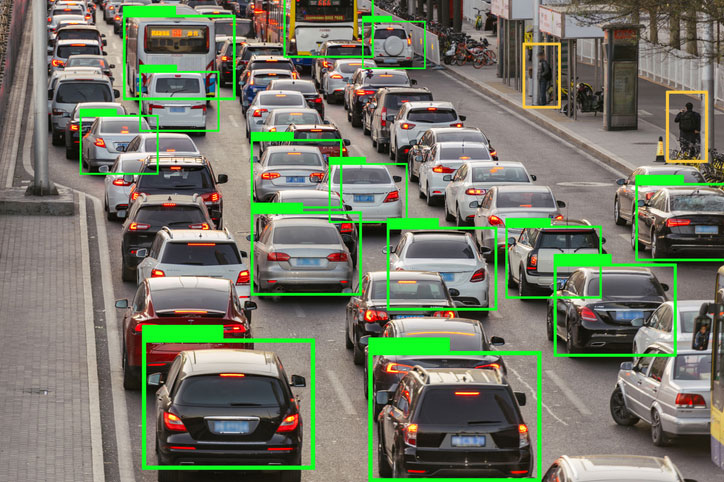

- Transportation - Self-driving cars couldn’t exist without computer vision, making this one of the most critical applications. Computer vision routines identify other vehicles, pedestrians, road signs and markings, and monitor drivers for signs of inattention or drowsiness. They are also used outside the vehicle to conduct traffic flow analysis and inspect road conditions.

- Security - Computer vision systems are also ubiquitous in another aspect of transportation: enforcement. Cameras monitor stop lights and school zones for violations, scan license plates to find wanted persons, and look for moving violations. Facial recognition plays a role in everything from border security to theft prevention. It’s an area of computer vision with big challenges, both ethical and technical, that will drive computer vision jobs for decades.

- Healthcare - Specialized computer vision projects started early in the medical industry. Medicine has large image sets for training and rigorous data classification methods. That has made it a good fit for X-ray and MRI image recognition, which helps diagnose everything from COVID-19 to cancer. New video-based computer vision systems are exploring uses in diagnosing and analyzing neurological and musculoskeletal diseases.

- Retail - Amazon made a splash in 2018 when it took a step out of virtual retailing and into the real world with Amazon Go convenience stores. They worked like a 7-Eleven, with a twist: customers didn’t need to check out. Instead, sophisticated computer vision systems tracked every item they picked up and simply charged their account as they left. By 2020, they had rolled the system out to grocery stores. Other retailers may follow. And, of course, many retail outlets already use computer vision in its security role, outlined above.

As a technology that is still in its infancy, computer vision can be expected to make big waves in many other fields eventually. With new capabilities and discoveries, all kinds of uses may emerge.

Computer Vision Models Give AI Access to Real World Data in Real-Time

Ultimately, though, the big goal of computer vision in the world of artificial intelligence is in allowing AI access to data.

As one of the senses that brings in the most information about the real world most rapidly, sight is one of the critical ways humans engage in reasoning, navigation, and interaction. And as far as we know, computer vision will be a key field in enabling AI to perform the same tasks… and more.

In fact, some schools of thought hold that the development of human intelligence relies on sensory input. If that’s true, then the limiting factor in developing artificial general intelligence right now may simply be that it doesn’t have access to the same depth of experience.

The visual realm opens up vast streams of that sensory data. AI systems that can access and process it may be the path to a more capable AI future.

Computer Vision Courses at the College Level Are Where AI Engineers Master the Future of Sight

There is no shortage of dedicated college degree and certificate programs that dive into the complexities of AI and computer vision. Many Master of Science in Artificial Intelligence programs have specializations in Computer Vision. Even further along the spectrum are dedicated degree programs such as the Master of Science in Computer Vision.

And more focused degrees are offered in practical professional applications, like the Master of Engineering for Computer Vision and Control or the Master of Science in Engineering Robotics with Specialization in Computer Vision.

The field is important and widespread enough that even a Master of Computer Science with an AI concentration will usually offer electives that explore computer vision.

Educational Certificate Programs Deliver Focused College-Level Computer Vision Examples

While computer vision has been a going concern for decades, there are still many people who went through their degree programs in math, computer science, or statistics without recognizing the opportunities.

For anyone who has a strong background in the elementary tools of machine learning, math, and statistics, certificate programs offer another path to computer vision expertise.

Graduate and postgraduate certificates in AI can offer a few classes that are hyper-focused on the subjects of interest. They are usually the same classes the same colleges use in their degree programs, with the same instructors. But rather than the big scope that comes with degree studies, you get laser-like training in computer vision specifically.

Certificates are offered at post-bachelor’s and post-master’s levels, so you will see options like a Visual Computing Graduate Certificate for post-bachelor studies, and Postgraduate Certificates in Remote Sensing and Image Processing for people who already have master’s degrees in a relevant field.

Computer Vision Course Options Combine Theory and Application

In both certificate and degree programs in computer vision, you’ll get a mix of coursework covering both the science and the engineering behind the field.

On the science side, you’re going to find the table laid with some cold, hard physics and computational theory:

- The Visual Spectrum

- Physics-based Methods in Vision

- Multiple View Geometry in Computer Vision

- Deep Reinforcement Learning Techniques

- Computer Graphics and Physics-based Rendering

- Machine Learning with Large Datasets

- Visual Learning and Recognition

When it comes to engineering, you’ll see how people are melding image input to AI models and training them to recognize objects and scenes. Classes can include:

- Deep Learning for Computer Vision

- Computer Vision Python Programming Techniques

- Probabilistic Graphical Models

- Multimedia Databases and Data Mining

- Robot Localization and Mapping

Finally, particularly at advanced levels, computer vision projects are required pieces in most degree programs today. Master’s students typically complete a capstone project or thesis, and doctoral students a dissertation. These are intended to synthesize what was learned over the course of the program. They also involve new research and original approaches to computer vision problems.

It’s worth noting that some of the biggest leaps in computer visual processing have come out of motivated graduate student theses or dissertation projects. In any event, the final project selected by a student in their advanced degree program is bound to set the stage for their future career in computer vision.

Professional Certifications in Computer Vision Demonstrate Expertise

IABAC (International Association of Business Analytics Certification) offers the Certified Computer Vision Expert through a variety of American training partners. The cert covers key areas of functionality across the spectrum of computer vision, including:

- Image Filtering and Enhancement

- Image Transformation and Feature Extraction

- Deep Learning in Computer Vision

- Object Detection

- Video Analysis and Processing
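As one small example of the first topic on that list, here is a median filter, a classic tool for removing “salt-and-pepper” noise. This Python sketch is an illustration, not material from the IABAC syllabus. Unlike a simple average, the median discards isolated outlier pixels without blurring a sharp edge.

```python
from statistics import median_low

def median_filter(image, radius=1):
    """Replace each pixel with the median of its (2*radius+1)^2 neighborhood.
    Border pixels use only the neighbors that fall inside the image; median_low
    keeps results as actual pixel values when a border window has an even size."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            row.append(median_low(window))
        out.append(row)
    return out

# A flat gray patch with one "salt" pixel (255) and one "pepper" pixel (0).
noisy = [
    [100, 100, 100, 100],
    [100, 255, 100, 100],
    [100, 100, 100,   0],
    [100, 100, 100, 100],
]
print(median_filter(noisy))  # every pixel comes back as 100
```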

Prerequisites for obtaining the certification are:

- Strong programming skills in Python, C++, or Java

- Advanced understanding of linear algebra and calculus

- Familiarity with basic image filtering and segmentation techniques

- ML and deep learning experience with neural networks

- Proficiency with common libraries like OpenCV (Open Source Computer Vision Library) and frameworks like TensorFlow
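For a sense of what basic segmentation means in practice, here is Otsu’s method. It is a classic way to pick a threshold that splits an 8-bit grayscale image into background and foreground by maximizing the variance between the two classes. This is a from-scratch Python sketch with an invented image. OpenCV offers the same technique through `cv2.threshold` with the `cv2.THRESH_OTSU` flag.

```python
def otsu_threshold(pixels):
    """Return the threshold t (0-255) that maximizes between-class variance when
    pixels <= t are treated as background and pixels > t as foreground."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * c for i, c in enumerate(hist))

    best_t, best_var = 0, -1.0
    count_bg, sum_bg = 0, 0
    for t in range(256):
        count_bg += hist[t]
        sum_bg += t * hist[t]
        count_fg = total - count_bg
        if count_bg == 0 or count_fg == 0:
            continue  # one class is empty, so this split is meaningless
        mean_bg = sum_bg / count_bg
        mean_fg = (sum_all - sum_bg) / count_fg
        # Unnormalized between-class variance; dividing by total**2 would not change the winner.
        between_var = count_bg * count_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# Invented image: a dark region on the left, a bright region on the right.
image = [
    [20, 25, 200, 210],
    [30, 22, 220, 205],
]
threshold = otsu_threshold([p for row in image for p in row])
mask = [[1 if p > threshold else 0 for p in row] for row in image]
print(threshold, mask)  # 30 [[0, 0, 1, 1], [0, 0, 1, 1]]
```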

For that matter, other professional certifications that aren’t specific to computer vision, but are broadly useful in AI and ML may also be handy.

For example, the DeepLearning.AI TensorFlow Developer Professional Certificate testifies to your qualifications in using that platform for various ML uses. Image recognition is one of the techniques it covers.

And Nvidia’s Jetson AI Certification includes training in using PyTorch in image classification tasks.

There are also platform certifications to consider. On big cloud platforms like Microsoft Azure, computer vision processes require careful management, and certification in those tools shows foundational knowledge for running those processes.

With computer vision expanding at the same pace as artificial intelligence generally, it’s very likely that new certs will be coming out all the time. The same way that the field of information technology has spun off dozens of sub-specialties and relevant certifications, computer vision may sprout new industry-standard certs for different applications or techniques.

The Future of Computer Vision Will Show the Path to Broader Artificial Intelligence

You may also notice that these education options line up with the three tracks that artificial intelligence careers in general are starting to follow in the United States:

- In-depth research and scientific exploration of core computer vision techniques, sensors, and computational methods. These careers and degrees are heavily rooted in theory and in advancing the state of the art in all types of vision systems.

- Practical and business-oriented applications in specific industries and government roles. This track is more about taking new developments and capabilities from the first track and putting them to effective use in specific areas. Some of the earliest computer vision uses fall into this category, like the practice of machine reading handwritten ZIP codes for mail sorting.

- Highly specialized computer vision adaptations in sensitive and expert fields. These roles are quite common for computer vision experts, including those working in medical imaging, transportation and autonomous vehicles, and environmental monitoring through satellite imagery.

Computer vision will continue to benefit both from core AI innovations like deep neural networks and from practical tools like AutoML. At the same time, more capable computer vision will spur more useful AI and robotics systems with real-world utility. Computer science experts working in in-depth AI research and development roles will drive those developments.

Many of the most obvious places this will find practical application are areas that are already thriving, like medical diagnostics and autonomous vehicles. These are fields where highly specialized AI experts build systems that augment the work of skilled professionals. You can expect increasingly capable and trustworthy AI systems driven by reliable computer vision routines in such areas.

But there will be even more advances along the practical and business-oriented track of AI engineering. On-device inference features in mobile phones will bring smart classification and advanced editing features to the average user. Machine translation of written text captured by a camera will become faster and more ubiquitous, a tie-in between computer vision and natural language processing technologies.

While there is nothing formal about any of these tracks, you can often see how certain degrees and careers in computer vision line up with them. Still, nothing prevents anyone with a particular kind of computer vision degree from taking a path into a different sort of career. And the reality is that AI is still in its early days, both in computer vision applications and in other uses. Additional shifts are entirely possible.

One thing is sure in computer vision and AI, however: no artificial intelligence will truly be capable of understanding and interacting with the world until it can see that world as we do. Computer vision remains key to building AI capable of full human reasoning and action.