Written by Scott Wilson

If you have been amazed or astonished at a picture AI has created, a conversation a chatbot has carried on, or how self-driving cars stay inside the lines, odds are good that you have deep learning to thank for it.

Of all the elemental research that has paid dividends in AI development over the past few years, deep learning has had the greatest impact. It’s both an outgrowth of broader study in machine learning in general, and an improvement on those basic techniques.

It’s also one of the elements of artificial intelligence that is most mysterious. Even the leading experts in the field can’t truly tell us what happens deep within the layers that make up an artificial neural network (ANN) built with deep learning techniques.

But no education in AI today is complete without a deep dive into deep learning.

Understanding Deep Learning Starts with Understanding Machine Learning

Understanding what deep learning is requires a trip down machine learning memory lane. So if you aren’t familiar with the field and concept of machine learning, your first stop in understanding deep learning needs to be our explainer on machine learning.

That’s because deep learning is one of many potential tools within the ML toolbox. It is an approach to representational learning that both uses core ML concepts and expands on them at the same time. Both deep learning and machine learning can be useful in artificial intelligence programming.

The development of multi-layered ML techniques is what has sent the field of AI soaring in recent years. But to understand those techniques, first you must understand how we got to them.

How Deep Learning AI Emerged at the Boundaries of Neuroscience and Computational Theory

For most people, deep learning traces its roots to the 1940s, when researchers studying the activity of neurons in the brain came to see the on/off firing of electrical impulses as analogous to the binary digital signals used in the very first electronic computers. In fact, one of the first designers of such machines, John von Neumann, may have originally been inspired by the neural model.

Much of the early research in AI focused on such neural networks. A version called the perceptron was created by researcher Frank Rosenblatt in 1958. It showed one of the first proven examples of machine learning by being trained to distinguish cards with colored squares printed on them.

Despite such promising advances, though, Marvin Minsky and Seymour Papert published a mathematical proof in 1969 that the perceptron model could never adapt to certain basic patterns. Only a model with additional layers could accomplish such a thing… and no one knew how to build such a thing.
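The limitation is easy to reproduce. The sketch below is a minimal perceptron written for illustration only (it is not Rosenblatt's original design, and the learning rate and epoch count are arbitrary assumptions). It learns the AND pattern, which a single straight line can separate, but cannot learn XOR, the textbook example of a pattern that no single-layer model can fit.

```python
# Minimal perceptron sketch (illustrative only; settings are arbitrary assumptions).

def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum crosses the threshold, otherwise stay off (0)."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=25, lr=0.1):
    """Perceptron rule: nudge the weights toward each misclassified example."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

def accuracy(samples, weights, bias):
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(predict(weights, bias, x) == t for x, t in samples)
    return hits / len(samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

However long the XOR training runs, at least one of the four cases stays wrong, because no single linear threshold can separate them.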

But hints of such ideas were coming. In 1986, Geoffrey Hinton, working with David Rumelhart and Ronald Williams, used backpropagation, a method of feeding errors back through the network, to show how multiple layers could be trained. And by the 1990s, practical systems using backpropagation in convolutional neural networks were able to recognize handwritten ZIP codes on envelopes. Unlike Rosenblatt’s perceptron, these systems could come to recognize key features in the data independently.
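To make the idea of feeding errors backward concrete, here is a minimal sketch of backpropagation on a tiny network with one hidden layer, trained on the XOR pattern that a single-layer perceptron cannot fit. The network size, seed, learning rate, and function names are all assumptions chosen for illustration. It is not a reconstruction of the 1986 experiments.

```python
import math
import random

XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_network(n_hidden=3, seed=0):
    """Small random starting weights; the seed and sizes are arbitrary choices."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }

def forward(net, x):
    """Input -> hidden layer -> single output, all through sigmoid units."""
    hidden = [sigmoid(b + sum(w * xi for w, xi in zip(ws, x)))
              for ws, b in zip(net["w_hidden"], net["b_hidden"])]
    output = sigmoid(net["b_out"] + sum(w * h for w, h in zip(net["w_out"], hidden)))
    return hidden, output

def loss(net, samples):
    """Mean of 0.5 * squared error over the samples."""
    return sum(0.5 * (forward(net, x)[1] - t) ** 2 for x, t in samples) / len(samples)

def backprop(net, x, target):
    """Gradient of 0.5 * (output - target)**2 for one sample."""
    hidden, output = forward(net, x)
    # Error signal at the output unit (chain rule through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Feed the error backward: each hidden unit's share, via its outgoing weight.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(net["w_out"], hidden)]
    return {
        "w_out": [delta_out * h for h in hidden],
        "b_out": delta_out,
        "w_hidden": [[d * xi for xi in x] for d in delta_hidden],
        "b_hidden": delta_hidden,
    }

def train_epoch(net, samples, lr=0.5):
    """One full-batch gradient descent step using the averaged gradients."""
    grads = [backprop(net, x, t) for x, t in samples]
    n = len(samples)
    for j in range(len(net["w_out"])):
        net["w_out"][j] -= lr * sum(g["w_out"][j] for g in grads) / n
        net["b_hidden"][j] -= lr * sum(g["b_hidden"][j] for g in grads) / n
        for i in range(len(net["w_hidden"][j])):
            net["w_hidden"][j][i] -= lr * sum(g["w_hidden"][j][i] for g in grads) / n
    net["b_out"] -= lr * sum(g["b_out"] for g in grads) / n

net = init_network()
loss_before = loss(net, XOR)
for _ in range(2000):
    train_epoch(net, XOR)
loss_after = loss(net, XOR)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

The key step is `delta_hidden`: the output error is passed back through the outgoing weights, which gives the hidden layer a training signal it would otherwise lack. How quickly and how well such a small network fits XOR depends on the starting weights and learning rate.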

Computing Horsepower Was the Shot in the Arm That Deep Learning Models Needed to Fly

All this was happening as computational machinery was improving by leaps and bounds. The horsepower of modern computers and software turned out to be the secret sauce to training the hidden layers in the middle of deep neural networks (DNN). Millions or billions of data points improved performance to the point where the results could seem almost magical.

Our vision was that big data would change the way machine learning works. Data drives learning.

~ Doctor Fei-Fei Li, creator of the ImageNet training dataset

The availability of more data and faster processing speeds also unlocked new deep learning techniques. Straightforward feedforward neural nets pass data through once. Recurrent neural networks, by contrast, loop output back to the start… giving them a sort of memory.
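The difference can be sketched with a single unit. In this toy example (the weights and the `tanh` activation are assumptions picked for illustration), the feedforward function sees only the current input, while the recurrent step also receives its own previous state, so earlier inputs keep influencing later outputs.

```python
import math

def feedforward(x, w_in=0.8, bias=0.0):
    """One pass, no memory: the same input always yields the same output."""
    return math.tanh(w_in * x + bias)

def recurrent_step(x, hidden, w_in=0.8, w_rec=0.5, bias=0.0):
    """Blend the current input with the previous hidden state."""
    return math.tanh(w_in * x + w_rec * hidden + bias)

def run_recurrent(sequence):
    """Feed a sequence through the recurrent unit, carrying the state forward."""
    hidden = 0.0  # empty memory before the first input
    states = []
    for x in sequence:
        hidden = recurrent_step(x, hidden)
        states.append(hidden)
    return states

sequence = [1.0, 0.0, 0.0, 1.0]
ff_outputs = [feedforward(x) for x in sequence]
rnn_states = run_recurrent(sequence)

print("feedforward:", ff_outputs)
print("recurrent:  ", rnn_states)
```

The first and last inputs are identical, so the feedforward outputs for them match. The recurrent states differ because the last step still carries a trace of everything that came before it.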

And this was enough to give them the tools to power generative systems. Probabilistic prediction of chunks of information, like phrases in sentences, could be used to paint pictures, write papers, compose sonatas, and teach robots to walk. That may leave you wondering what you could achieve with deep learning in your own career.

How Does Deep Learning Work?

Naked machine learning techniques are powerful, but require considerable preparation and careful design to make use of. Data fed into ML algorithms has to be carefully cleaned and labeled to deliver meaningful results.

Initially, an ML algorithm fed an image for classification will have no built-in understanding of what data in that image is important for its purposes. Of all the many pixel points, which ones are the key for identifying objects? What sort of values distinguish them from all the other pixels in the picture? Is color important? How are the edges of objects determined and represented? What kind of three-dimensional space do they occupy?

Piece by piece, algorithms have to make thousands of determinations that the neural networks of a human brain handle almost effortlessly.

A single, extremely complex algorithm can be built to perform that function of discrimination. The basic linear classification of such algorithms works quickly, but crudely. It has little flexibility and must be carefully constructed to work with all the potential universes of pixel data it could be fed. Alternatively, it may only be expected to work in and on very restricted types of images… those with certain perspectives, lighting values, and so on.

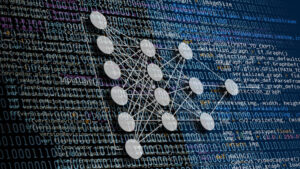

Deep learning offers a way to break down the steps of discrimination into many discrete but simple tasks. By arranging these steps in multiple layers (the “deep” in deep learning), researchers found that surprisingly complex attributes could be taught to the program.

Equally importantly, the process of teaching those functions didn’t rest with the ML engineers. Instead, the ML algorithms teach themselves the optimal structure from the data itself. By feeding data repeatedly through the layers, amazingly complex capabilities can emerge with very little supervision.

Machine Learning Researchers Are Pushing the Boundaries of Deep Neural Networks (DNN) Every Day

All that is just the start, however. By combining different types of deep neural networks, you can combine capabilities. For instance, a convolutional artificial neural network designed for image recognition can then pass its results on to a recurrent neural network that has been trained as a natural language generator to create a description of the scene that humans can read.

Put them the other way around, with an NLP deep neural network in front of a generative transformer trained on images, and you have tools like DALL-E, which can take a plain English description and create fantastic or realistic pictures almost instantly.

New research into a process called transfer learning is also helping to create artificial neural networks that can be used for multiple purposes. The deepest hidden layers in some ANNs may feed multiple uses. A model trained on CT scans for the purpose of detecting lung cancer tumors, for example, may develop generic abilities in processing CT imagery that could be reused for a separate application looking for kidney stones, or potentially even for airport security explosives detection.

What Can Deep Learning Be Used For?

So far we’ve name-dropped medical image processing, natural language processing and generation, and image generation as applications of deep neural networks. But that just scratches the surface.

If you just have a single problem to solve, then fine, go ahead and use a neural network.

~ Dr. Marvin Minsky

If you look under the hood at any kind of incredible AI system in the world today, you’ll find an artificial neural network humming away. Deep learning has been the Swiss army knife in the AI toolkit in recent years.

- Computer Vision – For machines to apply intelligence to the world around them, they must have some way to take in information about that environment. Vision, which most of us take for granted, is an information-rich ability… but also one that is chaotic and hard to process. But deep learning has vaulted computer vision performance to new levels recently. This is such an important application that it gets its own entry in our discussion of AI technology.

- Natural Language Processing – If humans are to interact with artificial intelligence, language is a massive challenge. Binary is what machines speak. Getting to a halfway point like C++ or Java requires a lot of effort on both sides, and remains very limited in what it can express. So teaching machines to speak, hear, and read human languages is a prime target for deep learning systems. As with computer vision, you can find more about not only how deep learning is used but how NLP in general works in a dedicated article.

- Recommendation Systems and Marketing – Although the dreams of AI tend toward more noble uses than just driving the engines of commerce, some of the earliest uses of deep learning have been figuring out what consumers are most likely to buy. Curating products by identifying hidden trends and tastes is likely to continue to be a big area for neural networks.

- Drug Discovery and Toxicology Assessment – It was big news in 2022 when the creators of AlphaFold, at Google’s DeepMind, won a $3 million Breakthrough Prize for using AI to predict how proteins fold. That capability will help researchers discover and predict the effects of entirely new drugs. It came courtesy of deep learning that trained the tool on protein structures already worked out through experimental methods like X-ray crystallography, and it has since been used to predict structures for around 200 million proteins. Now, instead of laboriously X-raying such proteins one by one, tools like this can narrow the range of interesting structures and allow scientists to quickly create new medicines and therapies.

- Bioinformatics and Medical Diagnostics – In some studies, deep neural networks have already matched or beaten radiologists when it comes to spotting tumors in lung tissue. Although medical imaging and bioinformatics are complex fields requiring years of education to become a practitioner, deep learning is quickly becoming a powerful tool for healthcare professionals. It may help seek out and identify genetic markers of disease, spot subtle signs predicting medical complications from electronic health records, and double check for prescription medication conflicts.

- Financial Fraud Detection – As criminals have become more sophisticated and finance systems more complicated, banks and governments have turned to deep learning AI to detect suspect transactions.

- Materials Science – Similar to ways that deep neural networks can quickly and accurately explore chemical compounds for pharmaceuticals, they can also comb through molecular structures to look for other miracle materials for use in construction and manufacturing. In November of 2023, a DeepMind system was reported to have turned up more than 2 million new materials while looking for stable inorganic crystalline structures.

- Intelligence – People who know about how AI is being developed and applied in national intelligence and defense don’t talk about it. But with the world on the cusp of serious threats from cyberattacks, autonomous drone attacks, and other dark uses of AI, you can be sure there are people behind closed doors figuring out how deep learning can defend us.

Deep machine learning is also heavily used in data science. But when those disciplines intersect, you may as well just call it artificial intelligence.

New applications are emerging all the time.

Deep Learning Has Some Limitations

As incredible as deep learning techniques have proven to be, there are still some built-in limitations.

Deep Learning Produces Impenetrable Solutions

For starters, that inability to look into the deep middle layers and understand the transformations happening there creates an element of uncertainty. How much can we truly rely on DNN-created answers? There’s always a possibility that a network has learned a set of tricks that imitate the kind of reasoning it is intended to accomplish, but that actually conceal fatal glitches that subtly corrupt the answers. Hallucinations and other corruptions are a regular feature of DNN-powered AI… and so far, no one knows how to fix them.

Deep Learning Is Costly and Time-Consuming

Deep learning today is a technology that requires deep pockets and a lot of patience. The vast amounts of data and the in-depth processing requirements of training mean that DNNs can require months of training time and millions of dollars’ worth of computational costs. They are often built in the cloud, using some of the fastest collections of processing power on the planet. It also means that deep learning isn’t a viable tool in applications where relevant data doesn’t exist or is too ambiguous to train with.

Ironically, because artificial neural networks use statistical methods to break down and process problems, they are particularly bad at questions with concrete, factual answers.

Deep Learning Doesn’t Engage in Reasoning the Way Humans Do

Finally, deep learning faces a fundamental problem of representation. As a method of applying reasoning ability, it is a set of geometric transformations that generate answers corresponding to human concepts. But those transformations aren’t applying elementary logic in the way that humans can. You can see this when you ask a chatbot built purely on a language model, with no outside tools, to solve a basic math problem. It can only answer through statistical reference to the words in its training data. No actual mathematical operations are performed. It doesn’t treat math as a distinct category of operation to attempt.

The thing that I try to caution people the most is what we call the ‘hallucinations problem.’ The model will confidently state things as if they were facts that are entirely made up.

~ Sam Altman, CEO of OpenAI

Deep Learning Is Vulnerable to Adversarial Attacks

Exactly because deep learning systems do not develop an understanding of the material they process in the human sense, they are vulnerable to certain types of attacks that aim to corrupt or mislead them. Altering a few pixels scattered across an image of a camel will in no way prevent a human from correctly identifying it. But the same altered image fed to a pretrained artificial neural network (ANN) might cause it to think it’s a dolphin.
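A toy version of the idea can be sketched with a linear classifier standing in for the neural network. Everything here is hypothetical: the eight "pixels", the hand-picked weights, and the camel/dolphin labels are made up for illustration. The attack shown nudges every pixel by a small amount in the direction that hurts the score, in the spirit of gradient-sign attacks. That is a related but different flavor from changing only a few scattered pixels.

```python
def score(weights, bias, pixels):
    """Linear score: positive means 'camel', otherwise 'dolphin'."""
    return bias + sum(w * p for w, p in zip(weights, pixels))

def classify(weights, bias, pixels):
    return "camel" if score(weights, bias, pixels) > 0 else "dolphin"

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def adversarial(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to each pixel is
    simply that pixel's weight, so the sign of the weight gives the direction.
    """
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

# Hypothetical 8-"pixel" image and hand-picked weights, purely for illustration.
weights = [0.9, -0.7, 0.8, -0.6, 0.5, -0.9, 0.7, -0.8]
bias = 0.0
image = [0.6, 0.4, 0.5, 0.5, 0.6, 0.4, 0.5, 0.5]

tampered = adversarial(weights, image, epsilon=0.05)
largest_change = max(abs(a - b) for a, b in zip(image, tampered))

print("original:", classify(weights, bias, image))
print("tampered:", classify(weights, bias, tampered))
print("largest pixel change:", largest_change)
```

No pixel moves by more than 0.05 on a 0-to-1 scale, a change a person would barely notice, yet the many small shifts add up to push the score across the decision boundary.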

Limitations in Deep Learning Are Always Subject to the Next Generation of Researchers

Where there are limitations, there is also exploration. Ever since neural networks were pronounced dead and buried in 1969, there’s always been someone convinced they can overcome the conventional wisdom. And they have often been right.

Sometimes a problem will seem completely insurmountable. Then someone comes up with a simple new idea, or just a rearrangement of old ideas, that completely eliminates it.

~ Doctor Marvin Minsky

Similarly, a series of discoveries within the field of deep learning has propelled it to new heights in the last few years. Techniques like deep Q-learning, a form of deep reinforcement learning, and deep residual learning for image recognition are offering new capabilities all the time.

They join a history of successful breakthroughs that have brought deep learning as far as it has come already:

- Backpropagation - The idea of sending errors back through layers for additional training.

- Stochastic Gradient Descent - The iterative technique used to optimize neural network training.

- Dropout - A way to prevent overfitting by randomly dropping units and connections from networks during training.

- Convolutional Neural Networks - A constrained method of building neural networks that reuses the same small set of weights across the whole input, cutting the number of parameters and helping to prevent overfitting to training data.
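Three of the ideas in the list above are small enough to sketch in a few lines each. The versions below are deliberately stripped down and rest on assumptions made for illustration: the function names, the toy data, and the hand-written edge kernel are not taken from any particular library. Real frameworks implement the same ideas with many more options.

```python
import random

def sgd_step(weight, gradient, lr=0.01):
    """Stochastic gradient descent update: step against the gradient."""
    return weight - lr * gradient

def dropout(activations, drop_prob, rng):
    """Inverted dropout: zero random units during training, rescale the survivors."""
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

def conv2d(image, kernel):
    """Slide one small shared square kernel over the image ('valid' positions only)."""
    k = len(kernel)
    rows, cols = len(image) - k + 1, len(image[0]) - k + 1
    return [[sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
             for c in range(cols)] for r in range(rows)]

rng = random.Random(0)

# SGD: learn the slope of y = 2x from one randomly chosen example per step.
data = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
weight = 0.0
for _ in range(200):
    x, y = rng.choice(data)
    gradient = 2.0 * (weight * x - y) * x  # derivative of (weight * x - y) ** 2
    weight = sgd_step(weight, gradient)

# Dropout: roughly half the units are silenced, the rest are scaled up to compensate.
dropped = dropout([1.0] * 1000, drop_prob=0.5, rng=rng)

# Convolution: the same 2x2 kernel is reused at every position to find a vertical edge.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_kernel = [[-1, 1],
               [-1, 1]]
edges = conv2d(image, edge_kernel)

print("learned slope:", round(weight, 3))
print("units dropped:", dropped.count(0.0))
print("edge map:", edges)
```

The convolution is where the parameter savings show up: the 4x4 image is scanned with just four shared weights, and the edge map lights up only in the column where dark pixels meet bright ones.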

All of this takes a great deal of mathematical expertise. Command of vectors, gradients, and sigmoid functions is a must. Yet it turns out that the process of putting together these artificial neural networks is still significantly faster and easier than accomplishing the same feat with traditional machine learning techniques.

More and more complex techniques are being developed all the time. New deep learning approaches in artificial intelligence are just waiting for someone with the inspiration and talent to make them happen.

Deep Learning Courses Are Both Educational and Exploratory

Deep neural networks (DNN) are so integral to artificial intelligence and machine learning today that you won’t find an AI or ML degree program that doesn’t come with a deep learning course or several. While they are some of the most advanced ML processes in use today, even bachelor’s and associate degrees in AI and ML will introduce the theory and basic foundations used.

Asking whether a modern degree in AI includes deep learning skills is like asking if a math degree includes algebra.

Master’s programs, particularly a master’s in machine learning, will go into far more detail. This is the level where students are challenged to dive into the details of building, training, and understanding deep learning systems. Going beyond, PhD candidates are deeply involved with research and development efforts to advance deep learning. Most of the techniques and processes used in the field today first surfaced as a thesis or dissertation project.

Although they are a foundational technology in modern AI and are widely used in data science, there are few degrees that offer specializations in deep learning itself. Where you find them, they are usually combined with a more specific application, such as with a Master of Science in Artificial Intelligence with a concentration in Deep Learning and Computer Vision.

But deep learning studies are often integral in ML programs and in other AI specializations like Natural Language Processing and Data Engineering.

Educational Certificates Provide Another Path to Develop Deep Learning with Python and Other Tools

You will also have better luck looking for graduate and postgraduate certificates in deep learning. Certificates are short, inexpensive, hyper-focused programs that colleges offer in specific techniques or technologies. They are usually designed to build on core knowledge you’ve received in a related degree field. That means the instruction doesn’t start from scratch, but also that you are free to narrowly approach a specific technology.

So a Certificate in Machine Learning and Deep Learning or a Graduate Certificate in Deep Learning will take you step-by-step through the process of using deep learning to build your own artificial neural networks.

While they only have a few classes, you may find that some of them have the flexibility to tailor your coursework toward specific deep learning applications. Since that coursework is generally identical to the college classes at that level leading to AI or ML degrees, you can sometimes later count certificate progress toward one of those degrees.

Professional Certification Can Prove You Hold an Edge in Deep Learning Techniques

Professional certifications are an important kind of qualification in the technology industry. So you might expect that some of these valuable validations of your skills and knowledge would be available in deep learning.

Professional certification is different from an educational certificate in that it is less about educating you than validating your existing training and skills by experience and examination.

More often, however, you will find certifications that address deep learning only as a part of some other tool or technology. For example, one of the major open-source libraries used in building DNNs is TensorFlow, which is most often used from Python. A TensorFlow Developer Certificate tests your skills in ML and deep learning, but only through the prism of using TensorFlow as the platform to build them. And it comes with both the courses to help you develop that expertise and the exam requirements to prove it.

IABAC, a European professional certifying body, does offer the Certified Deep Learning Expert credential. It’s available through a variety of American training organizations. And many of the various available machine learning professional certifications include some deep learning skills as part of their assessment.

Taking Deep Learning into the Future as a Cornerstone of Artificial Intelligence Development

Taking a dive into deep learning is still a very safe bet on the AI career front. With applications in every important area of artificial intelligence, a strong foundation in this technology is a ticket to careers in every industry.

While neural networks and deep learning may not be the ultimate tool to create artificial general intelligence, they may be one piece of the puzzle. In January of 2024, for example, the New York Times reported a breakthrough in using AI to solve geometry problems, made by combining a deep learning-trained neural network with a symbolic reasoning engine.

And regardless of whether they play a part in the big game, they remain a powerful tool for creating many narrow AI systems. Engineers have barely scratched the surface in potential applications. And the power of deep learning continues to grow each day.